Transparency

Reconstruction and synthesis of refractive geometry

Single-Shot Analysis of Refractive Shape Using Convolutional Neural Networks

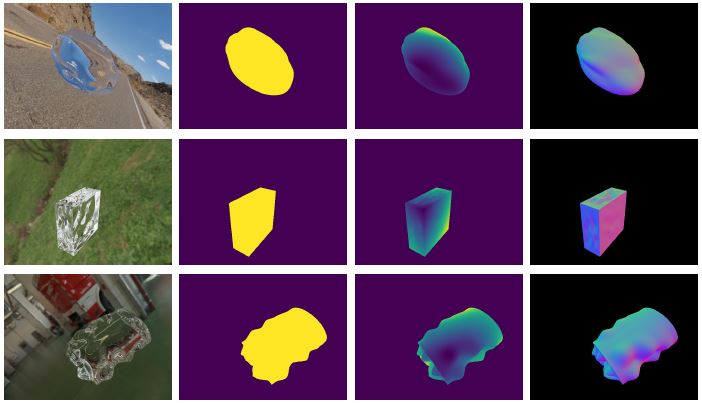

The appearance of a transparent object is determined by a combination of refraction and reflection, as governed by a complex function of its shape as well as the surrounding environment. Prior works on 3D reconstruction have largely ignored transparent objects due to this challenge, yet they occur frequently in real-world scenes. This paper presents an approach to estimate depths and normals for transparent objects using a single image acquired under a distant but otherwise arbitrary environment map. In particular, we use a deep convolutional neural network (CNN) for this task. Unlike for opaque objects, it is challenging to acquire ground truth training data for refractive objects, so we propose to use a large-scale synthetic dataset. To accurately capture the image formation process, we use a physically-based renderer. We demonstrate that a CNN trained on our dataset learns to reconstruct shape and estimate segmentation boundaries for transparent objects using a single image, while also achieving generalization to real images at test time. In experiments, we extensively study the properties of our dataset and compare to baselines, demonstrating its utility.

Scene reassembly after multimodal digitization and pipeline evaluation using photorealistic rendering

Transparent objects require acquisition modalities that are very different from the ones used for objects with more diffuse reflectance properties. Digitizing a scene where objects must be acquired with different modalities requires scene reassembly after reconstruction of the object surfaces. This reassembly of a scene that was picked apart for scanning seems unexplored. We contribute a multimodal digitization pipeline for scenes that require this step of reassembly. Our pipeline includes measurement of bidirectional reflectance distribution functions (BRDFs) and high dynamic range (HDR) imaging of the lighting environment. This enables pixelwise comparison of photographs of the real scene with renderings of the digital version of the scene. Such quantitative evaluation is useful for verifying acquired material appearance and reconstructed surface geometry, which is an important aspect of digital content creation. It is also useful for identifying and improving issues in the different steps of the pipeline. In this work, we use it to improve reconstruction, apply analysis by synthesis to estimate optical properties, and to develop our method for scene reassembly.

Publications

Material-based segmentation of objects [2019]

J. D. Stets, R. A. Lyngby, J. R. Frisvad, A. B. Dahl. Image Analysis (Proceedings of SCIA 2019), Lecture Notes in Computer Science, vol. 11482, 152-163

Single-shot analysis of refractive shape using convolutional neural networks [2019]

J. D. Stets, Z. Li, J. R. Frisvad, M. Chandraker. IEEE Winter Conference on Applications of Computer Vision, 995-1003

Scene reassembly after multimodal digitization and pipeline evaluation using photorealistic rendering [2017]

J. D. Stets, A. Dal Corso, J. B. Nielsen, R. A. Lyngby, S. H. N. Jensen, J. Wilm, M. B. Doest, C. Gundlach, E. R. Eiriksson, K. Conradsen, A. B. Dahl, J. A. Bærentzen, J. R. Frisvad, H. Aanæs. Applied Optics, 56(27), 7679-7690

Our 3D vision data-sets in the making [2015]

H. Aanæs, K. Conradsen, A. Dal Corso, A. B. Dahl, A. D. Bue, M. E. B. Doest, J. R. Frisvad, S. H. N. Jensen, J. B. Nielsen, J. D. Stets, G. Vogiatzis. Conference on Computer Vision and Pattern Recognition 2015, Institute of Electrical and Electronics Engineers

Accuracy in robot generated image data sets [2015]

H. Aanæs, A. B. Dahl. Image Analysis (Proceedings of SCIA 2015), Springer, Lecture Notes in Computer Science, vol. 9127, 472-479

Single-Shot Analysis of Refractive Shape Using Convolutional Neural Networks

Introduction

Light refracts and reflects at an interface between two materials. For transparent objects composed of materials such as glass, ice or some plastics, scattering and absorption are negligible, so that light passes through the material and we observe the effect of surface refraction and reflections. Thereby, the appearance of a refractive object is a distorted image of its surroundings. This means that we cannot reconstruct the shape of a refractive object using a local model, which makes solid refractive objects particularly challenging to handle in computer vision. We pick up this challenge and consider shape analysis of refractive objects made of homogeneous glass with a smooth surface, that is, glass without air bubbles, significant absorption or surface scratches. Such glass objects are common in human-made environments (windows, glasses, drinking cups, plastic containers and so on), which makes it desirable to design a computer vision system able to determine their geometric properties based on a single image.

The physics of refraction and reflection observed for transparent objects is well understood. Refraction occurs when light passes from one medium to another. The angle of refraction depends on the propagation speed of the light wave in the two media, as described by Snell’s law, while the relative fractions of reflection and refraction are described by Fresnel’s equations. The amount of reflection increases with greater angle of incidence. When light travelling in the denser medium is incident on an optically thinner medium at an angle of incidence greater than the critical angle, total internal reflection occurs. Due to these different types of interaction, the light path undergoes significant deviation from a straight line when passing through a glass object and its appearance becomes a complex combination of light incident from the entire surrounding environment. Thus, it is a challenging task for conventional shape estimation methods to cope with glass objects.
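The relations named above can be made concrete with a small numerical sketch. The following is not taken from the paper; it is a minimal illustration of Snell's law, the unpolarized Fresnel reflectance, and the critical angle for a smooth dielectric interface. The function names are hypothetical, and the refractive index of 1.5 used in the usage note is only a typical illustrative value for glass.

```python
import math


def refract_angle(theta_i, n1, n2):
    """Angle of refraction (radians) from Snell's law: n1 sin(theta_i) = n2 sin(theta_t).

    Returns None when no refracted ray exists (total internal reflection).
    """
    s = n1 / n2 * math.sin(theta_i)
    if s > 1.0:
        return None
    return math.asin(s)


def fresnel_reflectance(theta_i, n1, n2):
    """Fraction of unpolarized light reflected at a smooth dielectric interface."""
    theta_t = refract_angle(theta_i, n1, n2)
    if theta_t is None:
        return 1.0  # total internal reflection: all light is reflected
    ci, ct = math.cos(theta_i), math.cos(theta_t)
    r_s = (n1 * ci - n2 * ct) / (n1 * ci + n2 * ct)  # perpendicular polarization
    r_p = (n1 * ct - n2 * ci) / (n1 * ct + n2 * ci)  # parallel polarization
    return 0.5 * (r_s ** 2 + r_p ** 2)


def critical_angle(n1, n2):
    """Critical angle of incidence (radians) when going from dense n1 to thinner n2 < n1."""
    return math.asin(n2 / n1)
```

With air (index 1.0) and an assumed glass index of 1.5, `fresnel_reflectance(0.0, 1.0, 1.5)` gives about 4% reflection at normal incidence, the value grows towards 1 as the angle of incidence approaches 90 degrees, and `critical_angle(1.5, 1.0)` is roughly 41.8 degrees for light leaving the glass.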

Recent years have seen convolutional neural networks (CNNs) perform well across a variety of computer vision tasks. This includes shape estimation, where encouraging results have been observed for point cloud, depth or normal estimation for opaque objects and diffuse scenes. A few works consider the challenges of complex reflectance, but again only for opaque objects. Consequently, we consider the question of whether similar CNN-based approaches are applicable to transparent objects too. Despite the versatility of CNNs in adapting to appearance variations in several computer vision problems, our experiments demonstrate that the gap between opaque and transparent image formation is too vast for such approaches to carry over directly. This is not surprising, since estimating transparent shape requires decoupling a highly complex interaction between depth, normals and environment map.

Thus, a dataset is needed to specifically train CNNs for estimating the shape of transparent objects. But acquiring such a dataset from real objects requires significant expense. On the other hand, shape estimation CNNs trained on synthetic datasets have been demonstrated to generalize well to real opaque scenes. Thus, we present our first contribution, which is a large-scale dataset of glass objects rendered for a variety of shapes under several different environment maps. Unlike for several other synthetic datasets, the physical accuracy required for representing refractions is very high, so we use a GPU-accelerated physically-based renderer. Given such a dataset, it is still an open question whether an end-to-end trained CNN can disentangle the complex factors of image formation to estimate shape. We present our second contribution, which is a CNN for estimating depth, normal maps, and segmentation masks that achieves low prediction errors. More importantly, we demonstrate that this CNN trained on our dataset also generalizes well to images of real transparent objects.

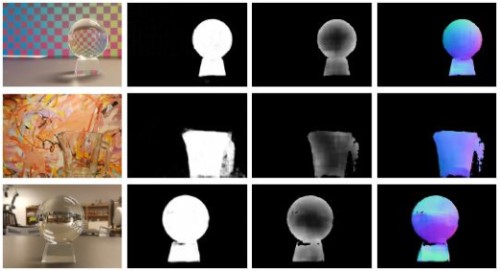

Sample images from the synthetic dataset (RGB image, mask, depth map, and normal map).

Dataset

The dataset consists of 80,000 rendered images (480x640 px) of approx. 700 shapes. Please cite our paper if you use the data.

| file | description |
|---|---|
| rgb.zip | Rendered RGB images (.png), 3 channels |
| label.zip | Binary mask (.npy), 1 channel |
| depth.zip | Raw depth, not scaled in range 0 to 1 (.npy), 1 channel |
| normal.zip | Normals scaled from -1 to 1 (.npy), 3 channels (XYZ) |
| content.txt | Information about which object and environment map is present in each image (.txt) (image id, object id, envmap id) |
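As a rough guide to working with these files, the sketch below loads the mask, depth, and normal arrays for one image with NumPy and parses a line of content.txt. It is not code from the authors. The folder layout (`label/`, `depth/`, `normal/` with one `<image id>.npy` file each), the comma or whitespace separator in content.txt, and all function names are assumptions made for illustration, so check the extracted archives before relying on them.

```python
import re
from pathlib import Path

import numpy as np


def parse_content_line(line):
    """Split one content.txt line into (image id, object id, envmap id).

    Assumes the three fields are separated by commas and/or whitespace.
    """
    fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    return tuple(fields)


def load_sample(root, image_id):
    """Load mask, depth and normal arrays for one image (hypothetical file layout)."""
    root = Path(root)
    mask = np.load(root / "label" / f"{image_id}.npy")
    depth = np.load(root / "depth" / f"{image_id}.npy")
    normal = np.load(root / "normal" / f"{image_id}.npy")
    return mask, depth, normal


def normals_to_rgb(normal):
    """Map normal components from [-1, 1] to 8-bit RGB for visualization."""
    return np.clip((normal + 1.0) * 0.5 * 255.0, 0, 255).astype(np.uint8)


def masked_depth_range(depth, mask):
    """Minimum and maximum raw depth over the pixels covered by the object mask."""
    inside = np.squeeze(depth)[np.squeeze(mask) > 0]
    return float(inside.min()), float(inside.max())
```

Because the depth is stored raw rather than scaled to the range 0 to 1, something like `masked_depth_range` is a convenient first step if a normalized depth map is needed for training or display.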

BibTex Reference

@inproceedings{stets2019single,

title={Single-Shot Analysis of Refractive Shape Using Convolutional Neural Networks},

author={Stets, Jonathan Dyssel and Li, Zhengqin and Frisvad, Jeppe Revall and Chandraker, Manmohan},

booktitle={IEEE Winter Conference on Applications of Computer Vision},

year={2019}

}

Download Dataset

Scene reassembly after multimodal digitization and pipeline evaluation using photorealistic rendering

Introduction

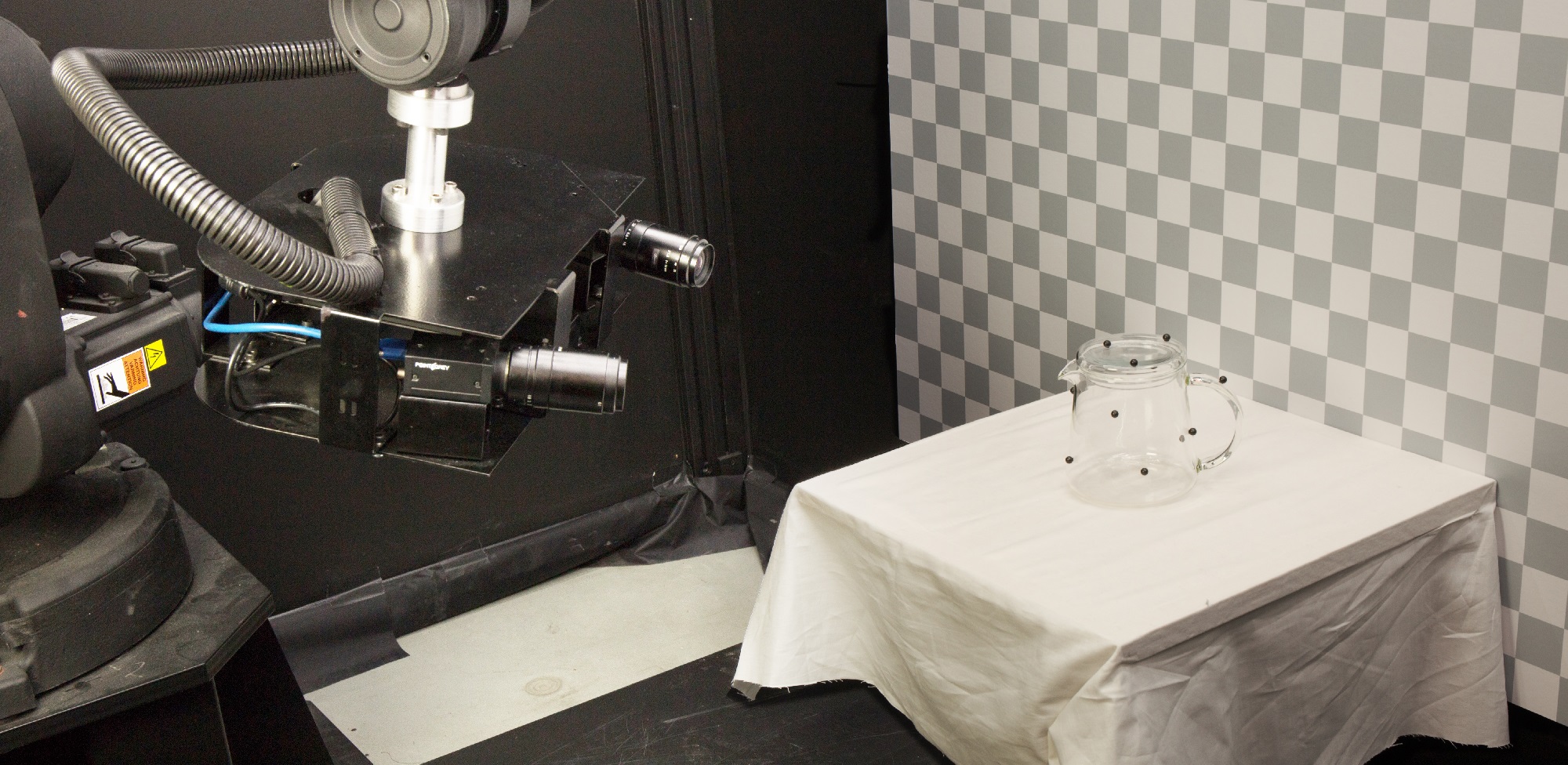

Several research communities work on techniques for optical acquisition of physical objects and their appearance parameters. Thus, we are now able to acquire nearly any type of object and perform a computer graphics rendering of nearly any type of scene. The range of applications is broad and includes movie production, cultural heritage preservation, 3D printing, and industrial inspection. A gap left by these multiple endeavors is a coherent scheme for acquiring a scene consisting of several objects that have very different appearance parameters, together with the reassembly of a digital replica of such a scene. Our objective is to fill this gap for the combination of transparent and opaque objects, as many real-world scenarios exhibit this combination. We propose a pipeline for acquiring and reassembling digital scenes from this type of heterogeneous real-world scene. In addition, our pipeline closes the loop by rendering calibrated images of the digital scene that are commensurable with photographs of the original physical scene (see the results below). This allows for validation and fine-tuning of appearance parameters. The quantitative evaluation we get from pixelwise comparison of rendered images with photographs is a great improvement with respect to validation of the acquired digital representation of the physical objects. When addressing the problem of acquiring a heterogeneous scene, there is an infinite variety of scenes and object types to choose from. So, to make our task feasible, we focus on scenes that combine glassware and non-transparent materials, more specifically, a white tablecloth and cardboard with a checkerboard pattern.

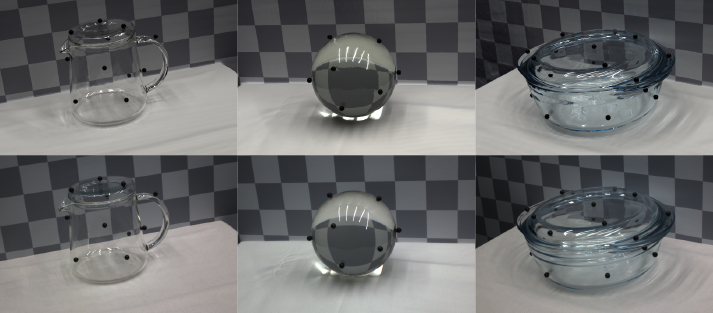

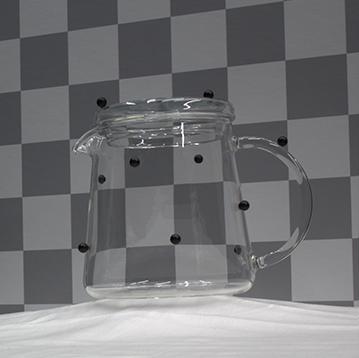

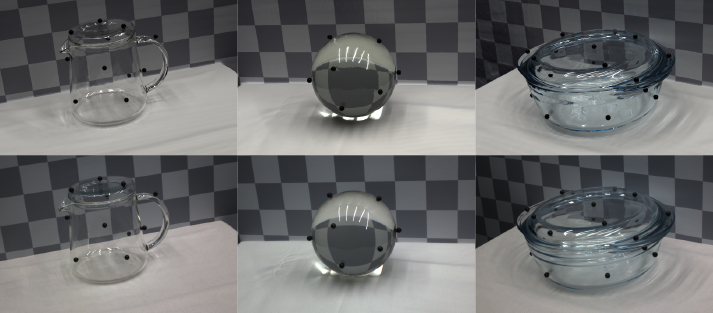

Solid glass sphere

Glass bowl with lid

Glass teapot with lid

We made these choices as glass requires a different acquisition modality, the tablecloth BRDF is spatially uniform but not necessarily simple, and the cardboard has simple two-color variation. The latter is particularly useful for observing how light refracts through the glass. The chosen case is also of particular interest, since glass is present in many intended applications of optical 3D acquisition. Considering the highly multidisciplinary nature of our work, we have released our dataset (links for dataset download are provided on this page). This facilitates further investigation by other researchers of the different steps of our pipeline, with the possibility of quantitative feedback at the end of the process.

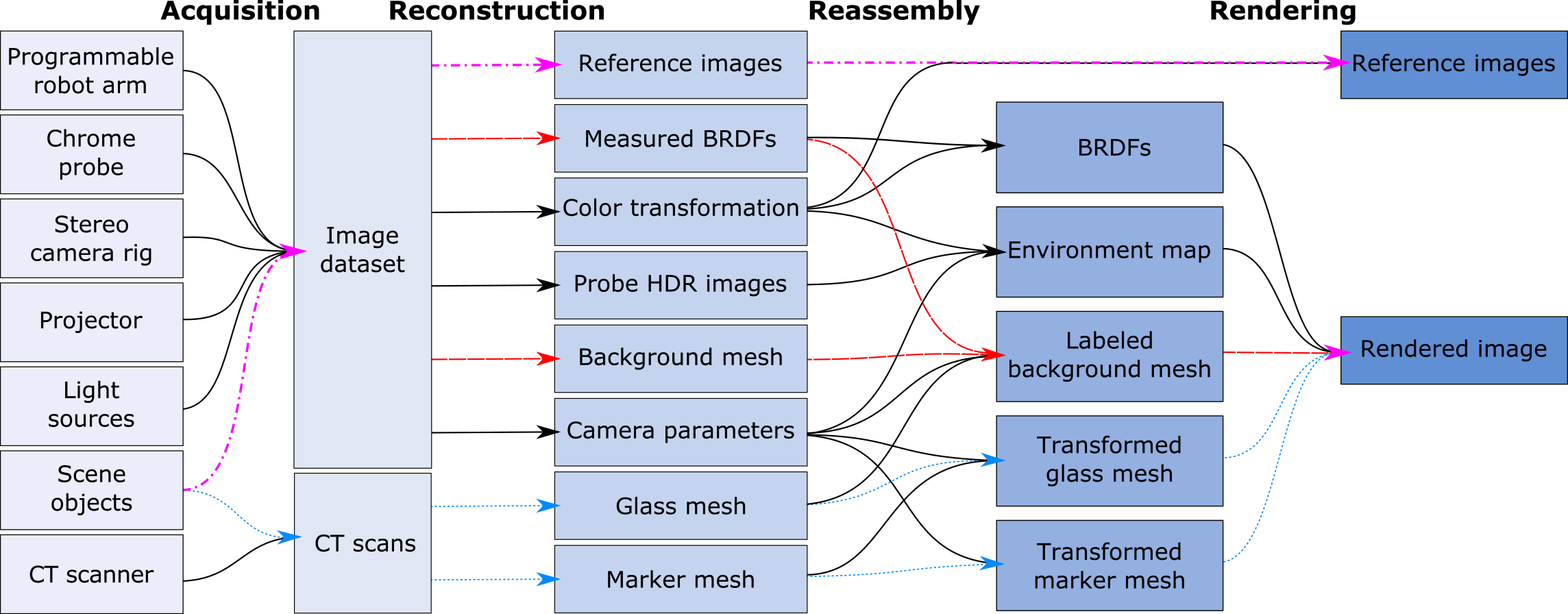

Pipeline

Overview of our digitization pipeline in four main stages: acquisition, reconstruction, reassembly, and rendering. Colored arrows show the path through the pipeline of transparent objects (dotted blue), non-transparent objects (dashed red), and both types together (dotted-dashed magenta).

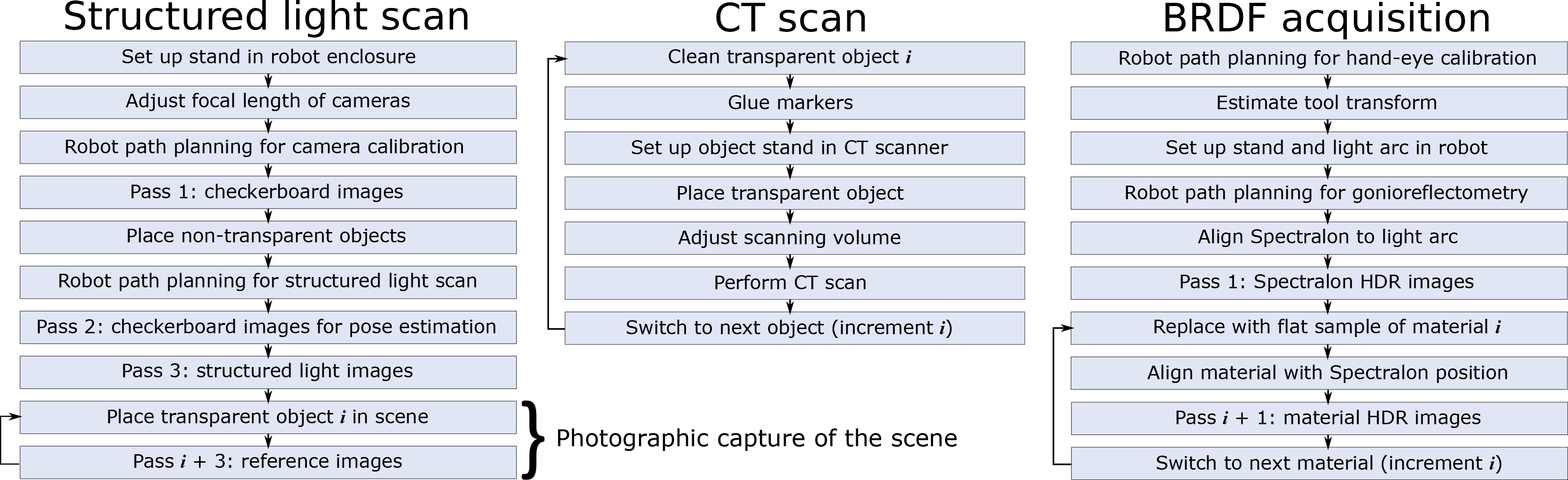

Workflow for scanning the geometry of non-transparent objects and collecting reference images (left), for scanning the geometry of transparent objects (middle), and for measuring material reflectance properties (right).

Dataset

We provide our dataset for download. Please cite our paper if you use the data. README files are included to describe the data. Additional description will be provided soon.

- dataset_full.zip (50.8 GB)

- Partial dataset

- Raw Data

- ct_scans.zip (8.2 GB)

- materials.zip (70.1 MB)

- Image dataset (README)

- calibration_data.zip (10.9 GB)

- scene_data.zip (9.2 GB)

- structured_light.zip (20.3 GB)

- intermediate_data.zip (1.4 GB)

- final_rendering_data.zip (714 MB)

Results

In the results below, rendered images (top row) are compared with photographs (bottom row). The scenes were digitized using our pipeline and include both glass objects and non-transparent objects (tablecloth and backdrop).
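The pixelwise comparison of a rendering with a photograph can be sketched as follows. This is only an illustration under stated assumptions, not the evaluation code used in the paper: it assumes the two images are already aligned, have the same resolution, and are expressed in the same intensity scale, and it uses the root-mean-square error as an example metric. The function name is hypothetical.

```python
import numpy as np


def pixelwise_error(rendered, photo):
    """Compare two aligned images pixel by pixel.

    Returns a per-pixel absolute error map (averaged over color channels)
    and the root-mean-square error over all pixels and channels.
    """
    rendered = np.asarray(rendered, dtype=np.float64)
    photo = np.asarray(photo, dtype=np.float64)
    if rendered.shape != photo.shape:
        raise ValueError("images must have identical shapes")
    diff = rendered - photo
    error_map = np.abs(diff)
    if error_map.ndim == 3:
        # Collapse the color channels so the map can be shown as one image.
        error_map = error_map.mean(axis=2)
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    return error_map, rmse
```

The error map shows where in the image the digital scene deviates from the photograph, for example along the silhouette of a glass object, while the single RMSE value is convenient for tracking whether a change to the pipeline or to an estimated optical property improves the match.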

Overlay comparison of the photograph and the rendered image of the teapot:

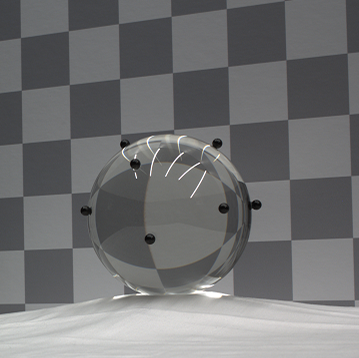

The rendering below exemplifies the use of our pipeline for virtual product placement using our digitized glass objects, with estimated optical properties and artifact-reduced removal of markers.

BibTex Reference

@article{Stets:17,

author = {Jonathan Dyssel Stets and Alessandro Dal Corso and Jannik Boll Nielsen and Rasmus Ahrenkiel Lyngby and Sebastian Hoppe Nesgaard Jensen and Jakob Wilm and Mads Brix Doest and Carsten Gundlach and Eythor Runar Eiriksson and Knut Conradsen and Anders Bjorholm Dahl and Jakob Andreas B{\ae}rentzen and Jeppe Revall Frisvad and Henrik Aan{\ae}s},

journal = {Appl. Opt.},

keywords = {Three-dimensional sensing; Optical properties; Color; BSDF, BRDF, and BTDF ; Calibration ; Multisensor methods},

number = {27},

pages = {7679--7690},

publisher = {OSA},

title = {Scene reassembly after multimodal digitization and pipeline evaluation using photorealistic rendering},

volume = {56},

month = {Sep},

year = {2017},

url = {http://ao.osa.org/abstract.cfm?URI=ao-56-27-7679},

doi = {10.1364/AO.56.007679},

abstract = {Transparent objects require acquisition modalities that are very different from the ones used for objects with more diffuse reflectance properties. Digitizing a scene where objects must be acquired with different modalities requires scene reassembly after reconstruction of the object surfaces. This reassembly of a scene that was picked apart for scanning seems unexplored. We contribute with a multimodal digitization pipeline for scenes that require this step of reassembly. Our pipeline includes measurement of bidirectional reflectance distribution functions and high dynamic range imaging of the lighting environment. This enables pixelwise comparison of photographs of the real scene with renderings of the digital version of the scene. Such quantitative evaluation is useful for verifying acquired material appearance and reconstructed surface geometry, which is an important aspect of digital content creation. It is also useful for identifying and improving issues in the different steps of the pipeline. In this work, we use it to improve reconstruction, apply analysis by synthesis to estimate optical properties, and to develop our method for scene reassembly.},

}