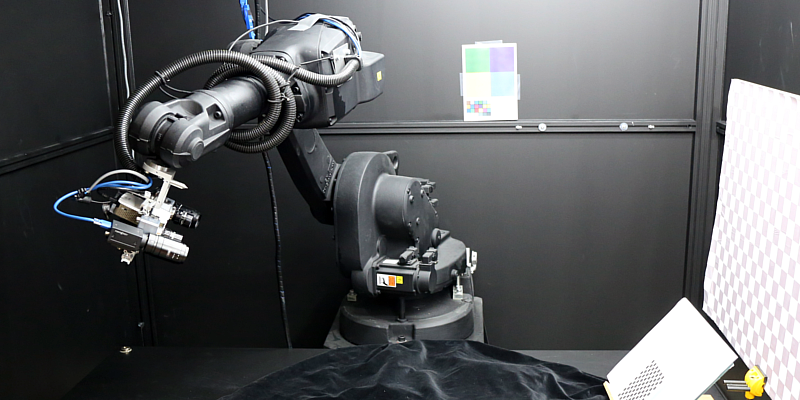

ABB Robot Environment

A controlled environment for data collection.

Introduction

This system is designed to control as many aspects of the environment as possible, lighting and camera positions in particular. The robot is an ABB IRB 1600 10/1.45m with a repeatability of 0.05 mm, which ensures that the camera positions have negligible drift between data acquisitions.
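To give a feel for what a 0.05 mm repeatability means in image terms, the following minimal sketch converts a lateral camera displacement into a pixel shift using a simple pinhole model. The focal length and working distance are hypothetical values chosen for illustration only; they are not specifications of this setup.

```python
def pixel_shift(displacement_mm, depth_mm, focal_px):
    """Image shift in pixels of a point at depth_mm when the camera moves
    displacement_mm parallel to the image plane (pinhole model)."""
    return focal_px * displacement_mm / depth_mm


REPEATABILITY_MM = 0.05  # robot repeatability quoted in the text
FOCAL_PX = 4000.0        # hypothetical focal length, in pixels
DEPTH_MM = 500.0         # hypothetical camera-to-object distance

shift = pixel_shift(REPEATABILITY_MM, DEPTH_MM, FOCAL_PX)
print(f"lateral shift at the repeatability bound: {shift:.2f} px")
```

Under these assumed values the shift stays below one pixel. A longer focal length or a shorter working distance increases it proportionally.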

In general, the positions reported by the robot are not used directly; the camera positions are instead found through calibration with a checkerboard. Given a good calibration, this provides much more precise positions.

The complete system can be interfaced via Python APIs to allow people with little knowledge of robotics to use the system.

Datasets

MVS Dataset

Multi-view Stereo Dataset

NRSfM Dataset

Non-rigid Structure from Motion Dataset

Features

Tool

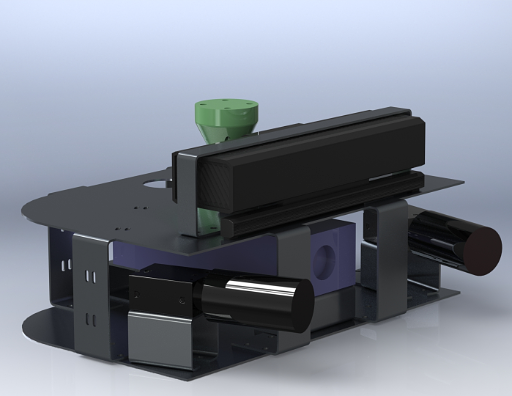

The robot tool consists of two 9.1MP Point Grey Grasshopper 3 cameras, a WinTech LightCrafter 4500pro and a Microsoft Kinect Fusion. This setup allows us to make high-quality 3D scans using techniques such as Structured Light Scanning and Multi-View Stereo; see also http://roboimagedata.compute.dtu.dk/.

Environment

The robot is placed in a dark room where the lighting conditions can be controlled programmatically. Two diffuser boxes and five semi-diffuse 6500K LED light fixtures allow fine control of scene lighting: both direction and intensity can be controlled.

Python API

The control framework is exposed as a Python API for easier interfacing with minimal a priori knowledge of robotics. The robot controller is based on the Open ABB API.

Lighting Arc

This allows for many different applications, such as BRDF estimation; see http://brdf.compute.dtu.dk/.

Publications

Large-scale data for multiple-view stereopsis [2016]

H. Aanæs, R. R. Jensen, G. Vogiatzis, E. Tola, A. B. Dahl

International Journal of Computer Vision, Springer, 1-16

Our 3D vision data-sets in the making [2015]

H. Aanæs, K. Conradsen, A. Dal Corso, A. B. Dahl, A. D. Bue, M. E. B. Doest, J. R. Frisvad, S. H. N. Jensen, J. B. Nielsen, J. D. Stets, G. Vogiatzis

Conference on Computer Vision and Pattern Recognition 2015, Institute of Electrical and Electronics Engineers

Accuracy in robot generated image data sets [2015]

H. Aanæs, A. B. Dahl

Image Analysis (Proceedings of SCIA 2015), Springer, Lecture Notes in Computer Science, vol. 9127, 472-479

Large scale multi-view stereopsis evaluation [2014]

R. R. Jensen, A. Dahl, G. Vogiatzis, E. Tola, H. Aanæs

2014 IEEE Conference on Computer Vision and Pattern Recognition, 406-413